Will AI save us or kill us, or both?


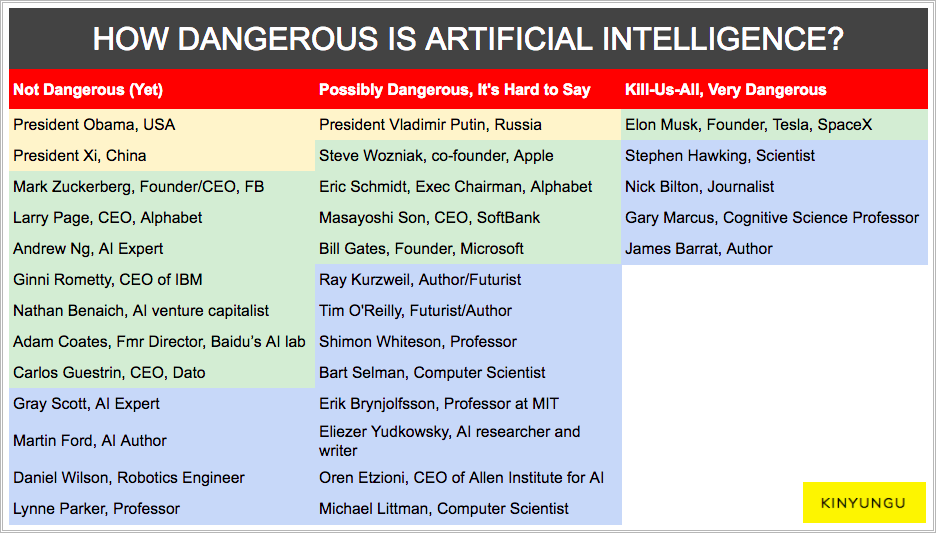

Look at what these 30+ key business leaders, world leaders, and AI experts have to say, and you will get everything from “we’re summoning the demon,” (Elon Musk) to “with AI especially, I am really optimistic,” (Mark Zuckerberg). The actual quotes are below, but here’s where they stack up.

(In case you were wondering, I’ve marked heads of state in yellow, tech leaders in green, and academics/thinkers in blue.)

Please note that the folks who don’t believe AI to be dangerous say so with the disclaimer (yet). It’s too early to call it dangerous. I like Andrew Ng’s analogy to electricity.

Just as electricity transformed almost everything 100 years ago, today I actually have a hard time thinking of an industry that I don’t think AI (Artificial Intelligence) will transform in the next several years. –Andrew Ng

Is electricity dangerous? Yes. Has it been weaponized? Yes. Has it been abused and accidentally misused? Yes. But that doesn’t mean we shouldn’t move forward.

Nonetheless, it’s good to understand why really smart people are worried. 4 key AI dangers emerge:

- Sinister AI. Just like any technology, in the wrong hands, AI can be utilized to unleash all sorts of damage. For example, imagine using AI to program autonomous drone weapons that could use facial recognition to carry out ethnic cleansing. While AI as a technology is no more sinister than hydrogen atoms or a piece of lead, it can be harnessed by terrorists for maximal damage.

- Stupid AI. More accurately, this is a human accidentally (or stupidly) giving the AI incomplete instructions. If you tell your AI-powered vehicle to “get me to the airport as fast as possible,” but it doesn’t know that not running over humans is a constraint…

- Solid AI. This is, in some ways, the “ideal” scenario – AI is increasingly beneficial and effective at doing exactly what it’s supposed to do. However, AI also effectively outsources all manual, routine, and algorithm-based labor out of human life. From architects to doctors to truck drivers to waiters, this is the destruction of millions of jobs. Now, there’s sky-rocketing unemployment. And only the rich can acquire new skills Matrix-style by plugging their brain into the latest VR + AI training programs. The rest of us get left in the dust, obsolete, unskilled, and without a purpose – what could possibly go wrong?

- Superintelligent AI. AI gets so much smarter, so much faster than we do that we can’t even begin to predict how it’ll outsmart us. Add Hollywood drama to this, and you’ve got the I, Robot / Terminator scenario, in which AIs somehow realize that humans are expendable or dangerous to their existence and build an army to destroy us. Most people would say this isn’t a realistic scenario.

The likelihood that the first three will happen is probably in the 99% range. Make no mistake: we will have AI exploited by terrorists, accidents from fully-deployed, half-baked AI, and perfectly-working AI that will kill jobs. The question is one of scale. Can terrorists use it more like a grenade or like a nuclear bomb? Will an occasionally errant drone run into a kid, or will an errant algo explode all our cars? Does AI off a few jobs in niche areas, or jobs for 1/3 of the population?

Okay, so here are the quotes (all are sourced here).

AI is Not Dangerous (Yet)

It promises to create a vastly more productive and efficient economy. If properly harnessed, it can generate enormous prosperity and opportunity. But it also has some downsides that we’re gonna have to figure out in terms of not eliminating jobs. It could increase inequality. It could suppress wages. –President Obama

I have pretty strong opinions on this. I am optimistic. I think you can build things and the world gets better. But with AI especially, I am really optimistic… And I think people who are naysayers and try to drum up these doomsday scenarios — I just, I don’t understand it. It’s really negative and in some ways I actually think it is pretty irresponsible. In the next five to 10 years, AI is going to deliver so many improvements in the quality of our lives. —Mark Zuckerberg, Founder/CEO, Facebook

Artificial intelligence would be the ultimate version of Google. The ultimate search engine that would understand everything on the Web. It would understand exactly what you wanted, and it would give you the right thing. We’re nowhere near doing that now. However, we can get incrementally closer to that, and that is basically what we work on. –Larry Page, CEO, Alphabet

We need to speed up building China into a strong country with advanced manufacturing, pushing for deep integration between the real economy and advanced technologies including internet, big data, and artificial intelligence. –President Xi (China)

Some people call this artificial intelligence, but the reality is this technology will enhance us. So instead of artificial intelligence, I think we’ll augment our intelligence. –Ginni Rometty, CEO of IBM

Just as electricity transformed almost everything 100 years ago, today I actually have a hard time thinking of an industry that I don’t think AI (Artificial Intelligence) will transform in the next several years. Much of the discussion about AI regulation stems from irrational fears about “sentient AI” or “evil killer robots,” rather than a deeper understanding of what it can and cannot do. Because AI today is still immature and is developing rapidly, heavy-handed regulation by any country will stunt that country’s AI progress. –Andrew Ng, AI Expert

I reckon there’s a chance for near complete automation of core, repetitive business functions in the future. –Nathan Benaich, AI venture capitalist

This is about really changing our relationship with the devices we use and the way we get things done, and making them much faster and taking away all the training that we have to do for ourselves. –Adam Coates, Former Director of Baidu’s Silicon Valley AI lab

I don’t think that an artificially intelligent system that has superhuman intelligence will be violent. I do think that it will disrupt our culture. –Gray Scott, AI Expert

I think that we’re getting to the point where we’re certainly going to see a much bigger impact than we have in the past, and it’s really about machines and computers finally approaching the point where they’re able to really substitute for a lot of things that people used to do on a much more broad basis than has been the case in the past. –Martin Ford, AI Author

I think what’s going to be most profound is our impact, positive and negative but mostly positive, on the kind of almost symbiotic relationship between humans and technology. —Carlos Guestrin, CEO, Dato

I absolutely don’t think a sentient artificial intelligence is going to wage war against the human species. –Daniel Wilson, Robotics Engineer

AI could open us up to the ability to be creative and to really think broadly because it can relieve us of some meaningless jobs. –Lynne Parker, Professor

AI is possibly dangerous, it’s too early to tell

The so-called control problem that Elon is worried about isn’t something that people should feel is imminent… We shouldn’t panic about it. –Bill Gates, Founder, Microsoft

Artificial intelligence is the future, not only for Russia, but for all of humankind. It comes with colossal opportunities, but also threats that are difficult to predict. Whoever becomes the leader in this sphere will become the ruler of the world. –President Vladimir Putin

I think that both the Russian and the Chinese leaders have recognised the value of this, not just for their commercial aspirations, but also their military aspirations. –Eric Schmidt, Executive Chairman, Alphabet

I believe this artificial intelligence is going to be our partner. If we misuse it, it will be a risk. If we use it right, it can be our partner. –Masayoshi Son, CEO, SoftBank

[WIRED MAGAZINE interviewing Steve Wozniak] A few years ago you warned that artificially intelligent robots would turn humans into their pets. This week, you said you had changed your mind. How did you get over this fear?

This originally started as I was extrapolating the ways that you can talk to your phone, and the ways it talks back. It’s becoming more like a person. What if this trend continues, and the AI develops conscious-type thinking? That worried me, and I spoke about it for a couple years, and was joined by smart people like Elon Musk and Stephen Hawking. But then I started thinking about a lot of the issues that come along with making an AI. We don’t really even know what intelligence is. You have a lot of people who study the brain, and all they can say is some processes are governed in certain places. But they don’t know how all those processes are wired together. They don’t even know where memory is stored! So, I’m not convinced that we’re really going to get to the point where they really can make an artificial brain. Not at the general level human brains work, like with intuition. A computer could figure out a logical endpoint decision, but that’s not the way intelligence works in humans. Well, I’m not gonna say they cannot do it. But every bit of tech we’ve ever built is for helping people in different ways. Technology is designed to be something good in life. So, I believe optimistically that the robots we’re building are going to help us have better human lives. –Steve Wozniak, co-founder, Apple

My view is not that AI is going to displace us. It’s going to enhance us. It does already. Machines will follow a path that mirrors the evolution of humans. Ultimately, however, self-aware, self-improving machines will evolve beyond humans’ ability to control or even understand them. –Ray Kurzweil, Author/Futurist

We tend to be afraid of new technology and we tend to demonize it, but to me, you have to use it as an opportunity for introspection. Our fears ultimately should be of ourselves and other people. —Tim O’Reilly, Futurist/Author

There are lots of examples of routine, middle-skilled jobs that involve relatively structured tasks, and those are the jobs that are being eliminated the fastest. Those kinds of jobs are easier for our friends in the artificial intelligence community to design robots to handle them. They could be software robots; they could be physical robots. —Erik Brynjolfsson, Professor at MIT

I really think in the future we are all going to be cyborgs. I think this is something that people really underestimate about AI. They have a tendency to think, there’s us and then there’s computers. Maybe the computers will be our friends and maybe they’ll be our enemies, but we’ll be separate from them. I think that’s not true at all, I think the human and the computer are really, really quickly becoming one tightly-coupled cognitive unit. Imagine how much more productive we would be if we could augment our brains with infallible memories and infallible calculators.

Society is already wrestling with difficult questions about privacy and security that have been raised by the internet. Imagine when the internet is in your brain, if the NSA can see into your brain, if hackers can hack into your brain. Imagine if skills could just be downloaded. What’s going to happen when we have this kind of AI but only the rich can afford to become cyborgs? What’s that going to do to society? –Shimon Whiteson, Associate Professor, University of Amsterdam

The US and I think most of the world has pushed hard on this idea of knowledge workers: you should get an education, you should educate yourself and stay ahead of the changing world. That may actually become somewhat difficult. It’s a sudden switch. When something becomes cheaper, when the self-driving car becomes cheaper than the human driver, immediately the whole system will flip around, and say just sell self-driving cars.

When an AI-based medical doctor becomes cheaper, why not switch all medical doctors to smart computer programs and have a few remaining human specialists for very special cases? That’s sort of one of the risks that AI people are worried about. It’s a societal risk. Society will have to adapt. How we will adapt is not fully clear yet. But I think it’s something we’ll have to think about. –Bart Selman, Computer Scientist, Cornell University

Anything that could give rise to smarter-than-human intelligence—in the form of Artificial Intelligence, brain-computer interfaces, or neuroscience-based human intelligence enhancement – wins hands down beyond contest as doing the most to change the world. Nothing else is even in the same league. –Eliezer Yudkowsky, AI researcher and writer

Another reason why we shouldn’t concern ourselves with Skynet and doomsday scenarios is because we have a lot more realistic and pressing problems to worry about. And that, for example, is AI’s impact on jobs. That’s a very real concern. –Oren Etzioni, CEO of Allen Institute for Artificial Intelligence

We can turn machines into workers — they can be labor, and that actually deeply undercuts human value. My biggest concern at the moment is that we as a society find a way of valuing people not just for the work they do. –Michael Littman, Computer Scientist

AI is VERY dangerous and will kill us all

AI is a fundamental existential risk for human civilization, and I don’t think people fully appreciate that…The risk of something seriously dangerous happening is in the five-year timeframe. 10 years at most…With artificial intelligence we’re summoning the demon. –Elon Musk

The development of full artificial intelligence could spell the end of the human race….It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.

Everything that civilisation has to offer is a product of human intelligence; we cannot predict what we might achieve when this intelligence is magnified by the tools that AI may provide, but the eradication of war, disease, and poverty would be high on anyone’s list. Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last. –Stephen Hawking

I don’t want to really scare you, but it was alarming how many people I talked to who are highly placed people in AI who have retreats that are sort of ‘bug out’ houses, to which they could flee if it all hits the fan. –James Barrat, Author of Our Final Invention: Artificial Intelligence and the End of the Human Era

The upheavals [of artificial intelligence] can escalate quickly and become scarier and even cataclysmic. –Nick Bilton, Journalist

Once computers can effectively reprogram themselves, and successively improve themselves, leading to a so-called “technological singularity” or “intelligence explosion,” the risks of machines outwitting humans in battles for resources and self-preservation cannot simply be dismissed. –Gary Marcus, Cognitive Science Professor

(Note: all quotes are sourced in this Google Doc.)
